Delivering feature flags with lightning speed and reliability has always been one of our top priorities at Split. We’ve continuously improved our architecture as we’ve served more and more traffic over the past few years (We served half a trillion flags last month!). To support this growth, we use a stable and simple polling architecture to propagate all feature flag changes to our SDKs.

At the same time, we’ve maintained our focus on honoring one of our company values, “Every Customer”. We’ve been listening to customer feedback and weighing that feedback during each of our quarterly prioritization sessions. Over the course of those sessions, we’ve recognized that our ability to immediately propagate changes to SDKs was important for many customers so we decided to invest in a real-time streaming architecture.

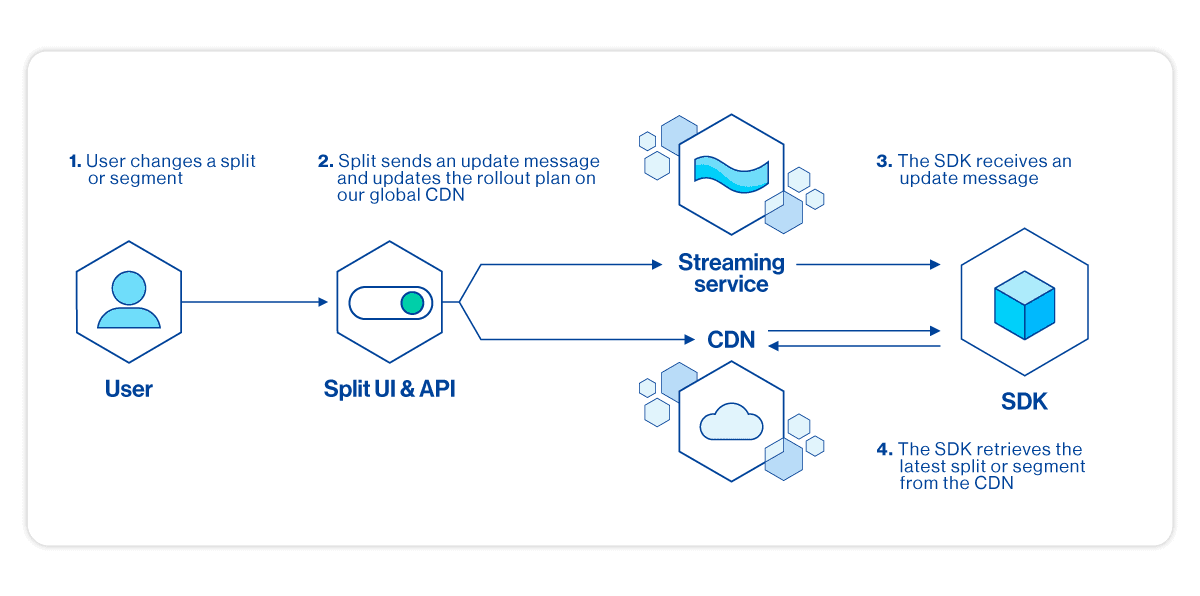

Our Approach to Streaming Architecture

Early this year we began to work on our new streaming architecture that broadcasts feature flag changes immediately. We plan for this new architecture to become the new default as we fully roll it out in the next two months.

For this streaming architecture, we chose Server-Sent Events (SSE from now on) as the preferred mechanism. SSE allows a server to send data asynchronously to a client (or a server) once a connection is established. It works over the HTTPS transport layer, which is an advantage over other protocols as it offers a standard JavaScript client API named EventSource implemented in most modern browsers as part of the HTML5 standard.

While real-time streaming using SSE will be the default going forward, customers will still have the option to choose polling by setting the configuration on the SDK side.

Streaming Architecture Performance

Running a benchmark to measure latencies over the Internet is always tricky and controversial as there is a lot of variability in the networks. To that point, describing the testing scenario is a key component of such tests.

We created several testing scenarios which measured:

- Latencies from the time in which a feature flag (split) change was made

- The time the push notification arrived

- The time until the last piece of the message payload was received

We then ran this test several times from different locations to see how latency varies from one place to another.

In all those scenarios, the push notifications arrived within a few hundred milliseconds and the full message containing all the feature flag changes were consistently under a second latency. This last measurement includes the time until the last byte of the payload arrives.

As we march toward the general availability of this functionality, we’ll continue to perform more of these benchmarks and from new locations so we can continue to tune the systems to achieve acceptable performance and latency. So far we are pleased with the results and we look forward to rolling it out to everyone soon.

Choosing when Streaming or Polling is Best for You

Both streaming and polling offer a reliable, highly performant platform to serve splits to your apps.

By default, we will move to a streaming mode because it offers:

- Immediate propagation time when changes are made to flags.

- Reduced network traffic, as the server will initiate the request when there is data to be sent (aside from small traffic being sent to keep the connection alive).

- Native browser support to handle sophisticated use cases like reconnections when using SSE.

In case the SDK detects any issues with the streaming service, it will use polling as a fallback mechanism.

In some cases, a polling technique is preferable. Rather than react to a push message, in polling mode, the client asks the server for new data on a user-defined interval. The benefits of using a polling approach include:

- Easier to scale, stateless, and less memory-demanding as each connection is treated as an independent request.

- More tolerant of unreliable connectivity environments, such as mobile networks.

- Avoids security concerns around keeping connections open through firewalls.

Streaming Architecture and an Exciting Future for Split

We are excited about the capabilities that this new streaming architecture approach to delivering feature flag changes will deliver. We’re rolling out the new streaming architecture in stages starting in early May. If you are interested in having early access to this functionality, contact your Split account manager or email support at support@split.io to be part of the beta.

To learn about other upcoming features and be the first to see all our content, we’d love to have you follow us on Twitter!

Get Split Certified

Split Arcade includes product explainer videos, clickable product tutorials, manipulatable code examples, and interactive challenges.

Switch It On With Split

The Split Feature Data Platform™ gives you the confidence to move fast without breaking things. Set up feature flags and safely deploy to production, controlling who sees which features and when. Connect every flag to contextual data, so you can know if your features are making things better or worse and act without hesitation. Effortlessly conduct feature experiments like A/B tests without slowing down. Whether you’re looking to increase your releases, to decrease your MTTR, or to ignite your dev team without burning them out–Split is both a feature management platform and partnership to revolutionize the way the work gets done. Switch on a free account today, schedule a demo, or contact us for further questions.